Awesome Egocentric

A curated list of papers on exocentric (third-person) and egocentric (first-person) vision.

Survey

- Bridging Perspectives: A Survey on Cross-view Collaborative Intelligence with Egocentric-Exocentric Vision

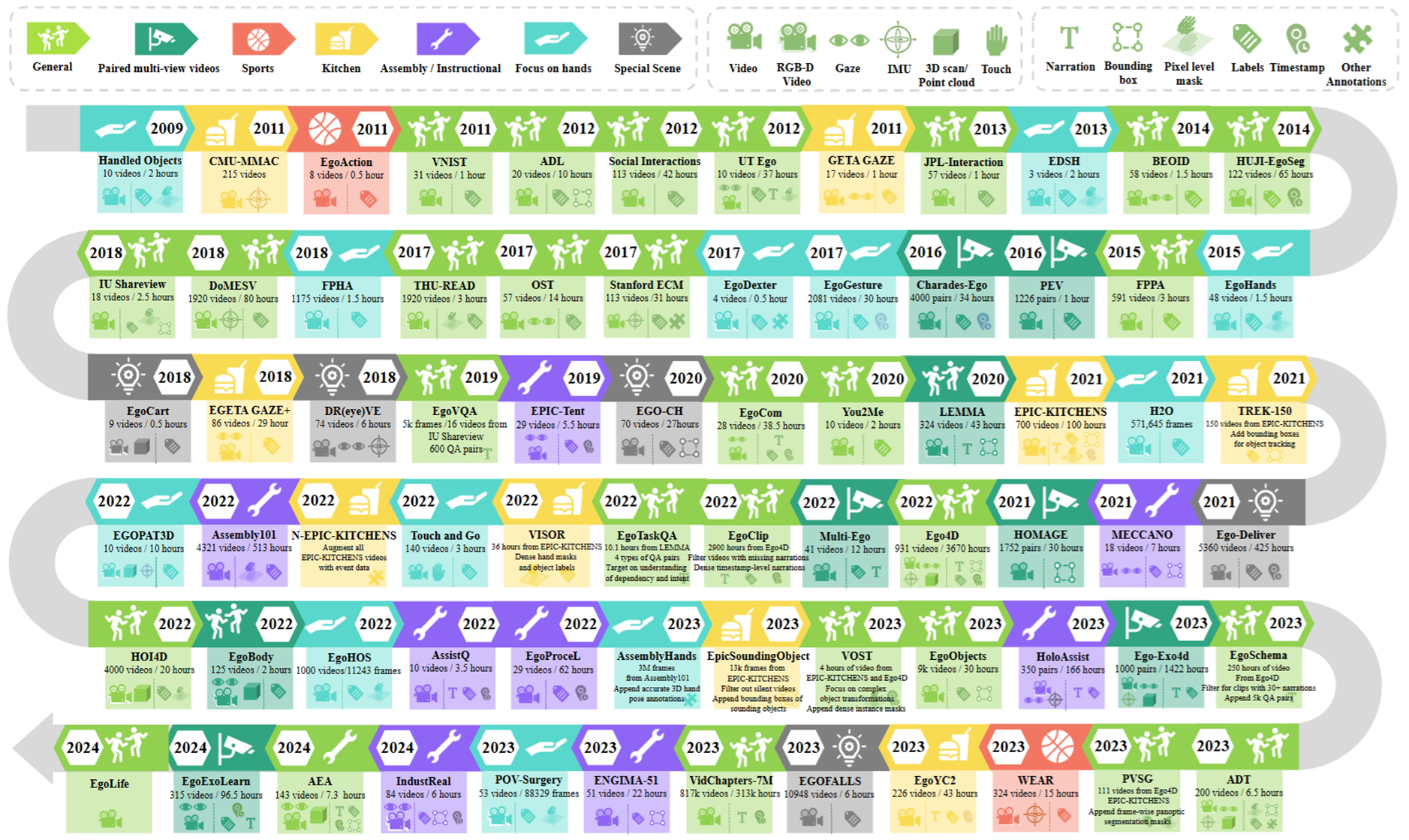

Datasets

| main category | sub-category | title | venue | comment | code available | computing resource |
|---|---|---|---|---|---|---|
| Egocentric Video Understanding | Egocentric Video Grounding | RGNet: A Unified Clip Retrieval and Grounding Network for Long Videos | ECCV 2024 | | has code | 4 NVIDIA RTX A6000 |
| | | Grounded Question-Answering in Long Egocentric Videos | CVPR 2024 | | has code | 4 NVIDIA A100 (80GB) |
| | | SnAG: Scalable and Accurate Video Grounding | CVPR 2024 | | has code | |
| | | Object-Shot Enhanced Grounding Network for Egocentric Video | CVPR 2025 | | has code | |
| | Egocentric Video Captioning | Retrieval-Augmented Egocentric Video Captioning | CVPR 2024 | | has code | |
| | Egocentric Video Retrieval | EgoCVR: An Egocentric Benchmark for Fine-Grained Composed Video Retrieval | ECCV 2024 | | has code | |
| | | Sound Bridge: Associating Egocentric and Exocentric Videos via Audio Cues | CVPR 2025 | | has code | 8 NVIDIA V100 |
| | Egocentric Video Mistake Detection | Gazing Into Missteps: Leveraging Eye-Gaze for Unsupervised Mistake Detection in Egocentric Videos of Skilled Human Activities | CVPR 2025 | | no code | |
| | Egocentric Environment Understanding | DIV-FF: Dynamic Image-Video Feature Fields For Environment Understanding in Egocentric Videos | CVPR 2025 | Dynamic Object Segmentation, Affordance Segmentation | has code | 1 NVIDIA RTX 4090 |
| | Egocentric Motion and Pose Estimation | EgoPoseFormer: A Simple Baseline for Stereo Egocentric 3D Human Pose Estimation | ECCV 2024 | | has code | |
| | | Egocentric Whole-Body Motion Capture with FisheyeViT and Diffusion-Based Motion Refinement | CVPR 2024 | | has code | |
| | | REWIND: Real-Time Egocentric Whole-Body Motion Diffusion with Exemplar-Based Identity Conditioning | CVPR 2025 | | has code | NVIDIA RTX 8000 |
| | Natural Language-Based Egocentric Task Verification | PHGC: Procedural Heterogeneous Graph Completion for Natural Language Task Verification in Egocentric Videos | CVPR 2025 | Agents verify the operation flows of procedural tasks in egocentric videos | has code | |
| | Open-World 3D Segmentation | EgoLifter: Open-World 3D Segmentation for Egocentric Perception | ECCV 2024 | | has code | 1 NVIDIA A100 (40GB) |
| | Audio-Visual Localization | Spherical World-Locking for Audio-Visual Localization in Egocentric Videos | ECCV 2024 | | no code | |
| | Social Role Understanding | Ex2Eg-MAE: A Framework for Adaptation of Exocentric Video Masked Autoencoders for Egocentric Social Role Understanding | ECCV 2024 | | no code | |
| | Egocentric Gaze Anticipation | Listen to Look into the Future: Audio-Visual Egocentric Gaze Anticipation | ECCV 2024 | | | |
| | Egocentric Visual Grounding | Visual Intention Grounding for Egocentric Assistants | ICCV 2025 | | | |
| Egocentric Motion | Action Recognition | Masked Video and Body-worn IMU Autoencoder for Egocentric Action Recognition | ECCV 2024 | | has code | |
| | | Multimodal Cross-Domain Few-Shot Learning for Egocentric Action Recognition | ECCV 2024 | | has code | |
| | Temporal Action Segmentation | Synchronization is All You Need: Exocentric-to-Egocentric Transfer for Temporal Action Segmentation with Unlabeled Synchronized Video Pairs | ECCV 2024 | | has code | |
| | Egocentric Activity Recognition | ProbRes: Probabilistic Jump Diffusion for Open-World Egocentric Activity Recognition | ICCV 2025 | | | |
| | Hand Pose Estimation & Reconstruction | 3D Hand Pose Estimation in Everyday Egocentric Images | ECCV 2024 | | has code | 2 NVIDIA A40 |
| | | HaWoR: World-Space Hand Motion Reconstruction from Egocentric Videos | CVPR 2025 | | has code | 4 NVIDIA A800 |
| | Motion Segmentation | Layered Motion Fusion: Lifting Motion Segmentation to 3D in Egocentric Videos | CVPR 2025 | | no code | 1 NVIDIA RTX A4000 |
| | Motion Language Model | EgoLM: Multi-Modal Language Model of Egocentric Motions | CVPR 2025 | | no code | |
| | Human Motion Capture | Ego4o: Egocentric Human Motion Capture and Understanding from Multi-Modal Input | CVPR 2025 | | no code | |
| | Object Manipulation Trajectory | Generating 6DoF Object Manipulation Trajectories from Action Description in Egocentric Vision | CVPR 2025 | | has code | same as PointLLM |
| Others | Egocentric Interaction Reasoning and Pixel Grounding (Ego-IRG) | ANNEXE: Unified Analyzing, Answering, and Pixel Grounding for Egocentric Interaction | CVPR 2025 | Referring Image Segmentation (RIS), Egocentric Hand-Object Interaction Detection (EHOI), and Question Answering (EgoVQA) | no code | 4 NVIDIA RTX 6000 Ada |
| | Generation of Action Sounds | Action2Sound: Ambient-Aware Generation of Action Sounds from Egocentric Videos | ECCV 2024 | | has code | |
| | 3D Human Mesh Recovery | Fish2Mesh Transformer: 3D Human Mesh Recovery from Egocentric Vision | ICCV 2025 | | | |
| Egocentric Datasets | | Nymeria: A Massive Collection of Multimodal Egocentric Daily Motion in the Wild | ECCV 2024 | Motion tasks, multimodal spatial reasoning and video understanding | | |
| | Composed Video Retrieval | EgoCVR: An Egocentric Benchmark for Fine-Grained Composed Video Retrieval | ECCV 2024 | | | |
| | Synthetic Data for Hand-Object Interaction Detection | Are Synthetic Data Useful for Egocentric Hand-Object Interaction Detection? | ECCV 2024 | | | |
| | Body Tracking | EgoBody3M: Egocentric Body Tracking on a VR Headset using a Diverse Dataset | ECCV 2024 | | | |
| | 3D Hand and Object Tracking | HOT3D: Hand and Object Tracking in 3D from Egocentric Multi-View Videos | CVPR 2025 | | | |
| | | HD-EPIC: A Highly-Detailed Egocentric Video Dataset | CVPR 2025 | VQA | | |
| | | Ego-Exo4D: Understanding Skilled Human Activity from First- and Third-Person Perspectives | CVPR 2024 | | | |
| | | EgoPressure: A Dataset for Hand Pressure and Pose Estimation in Egocentric Vision | CVPR 2025 | | | |
| | | Omnia de EgoTempo: Benchmarking Temporal Understanding of Multi-Modal LLMs in Egocentric Videos | CVPR 2025 | | | |
| | | EgoTextVQA: Towards Egocentric Scene-Text Aware Video Question Answering | CVPR 2025 | | | |
| | | RoboSense: Large-scale Dataset and Benchmark for Egocentric Robot Perception and Navigation in Crowded and Unstructured Environments | CVPR 2025 | | | |
| | EgoLifeQA | EgoLife: Towards Egocentric Life Assistant | CVPR 2025 | A suite of long-context, life-oriented question-answering tasks | | |
| | Generation of Action Sounds | Action2Sound: Ambient-Aware Generation of Action Sounds from Egocentric Videos | ECCV 2024 | | | |
| | Egocentric Video Grounding | Fine-grained Spatiotemporal Grounding on Egocentric Videos | ICCV 2025 | | | |

Words and Sentences

- spur: to motivate, encourage; to promote
- cross-modal heterogeneity and hierarchical misalignment challenges
- dub: to call (something) by a name; to nickname
- Literature on xxx is rich

Notes

https://arxiv.org/pdf/2508.07683 RL (GRPO) to enhance VG

ego

https://github.com/OpenGVLab/EgoVideo/tree/main/backbone

https://github.com/houzhijian/GroundNLQ/blob/main/feature_extraction/README.md

gaze

- In the Eye of MLLM: Benchmarking Egocentric Video Intent Understanding with Gaze-Guided Prompting

- GazeNLQ @ Ego4D Natural Language Queries Challenge 2025

- Global-Local Correlation for Egocentric Gaze Estimation